Improving equilibrium propagation without weight symmetry through Jacobian homeostasis

Laborieux, A., & Zenke, F., ICLR, 2024

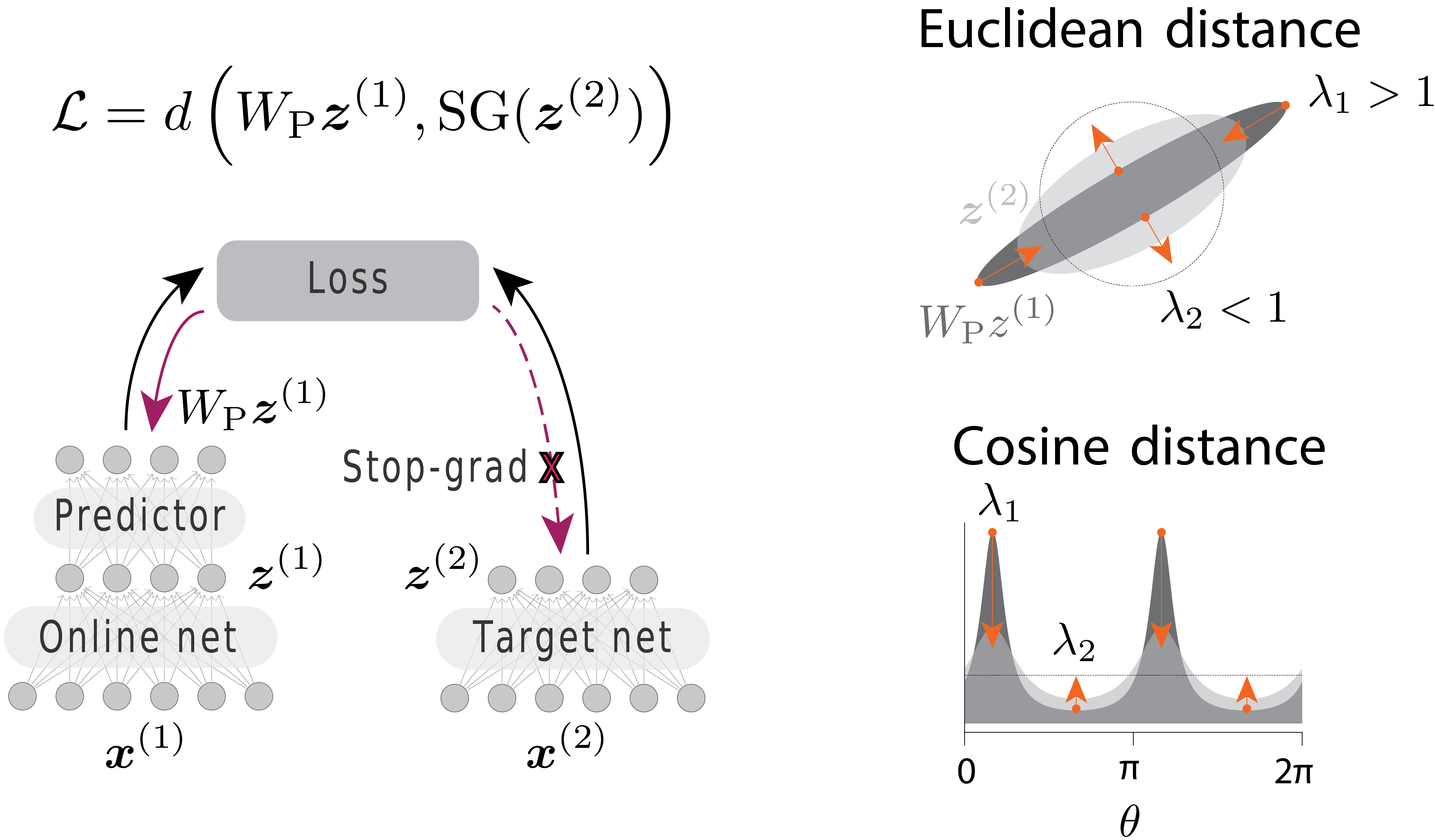

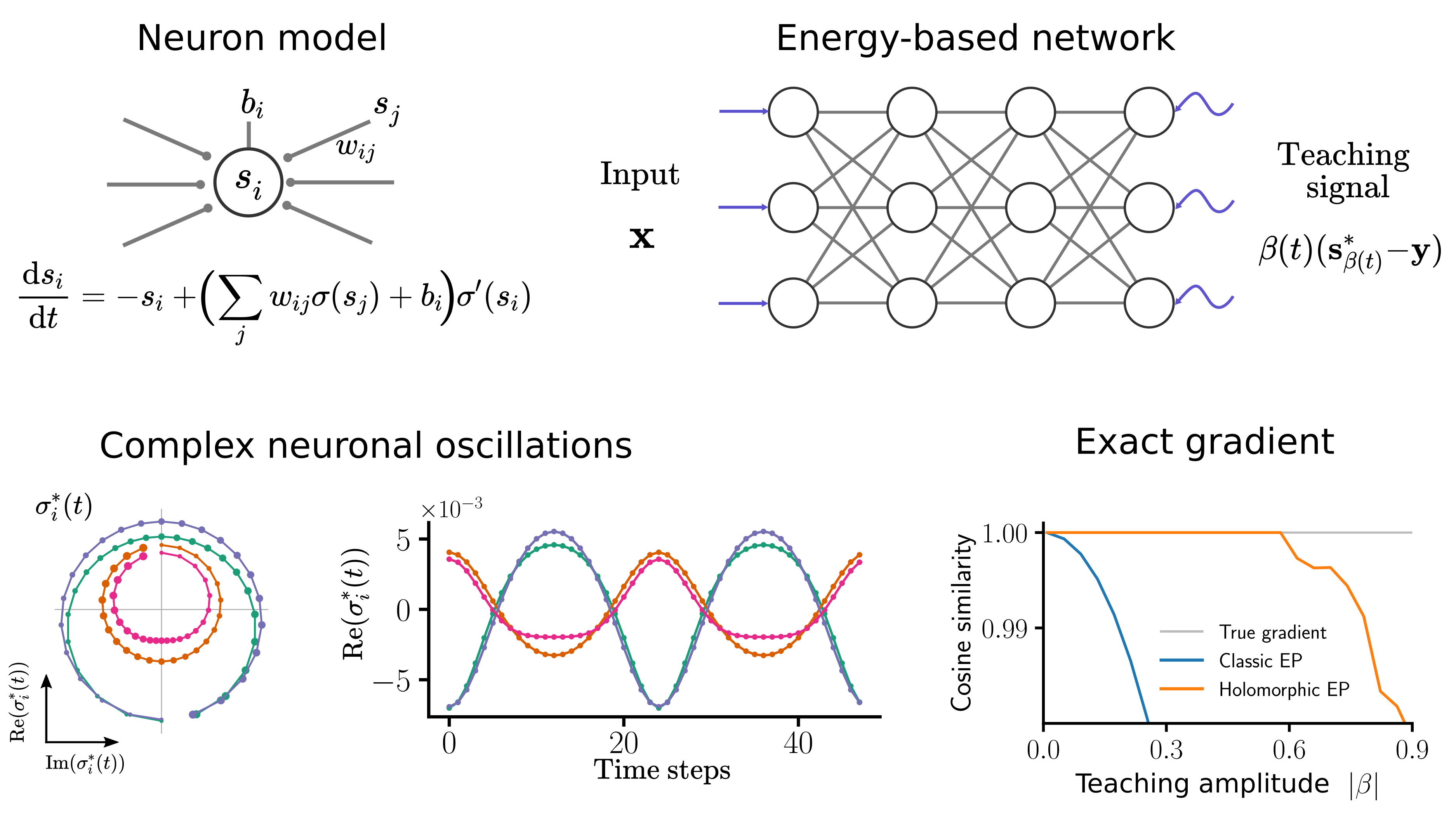

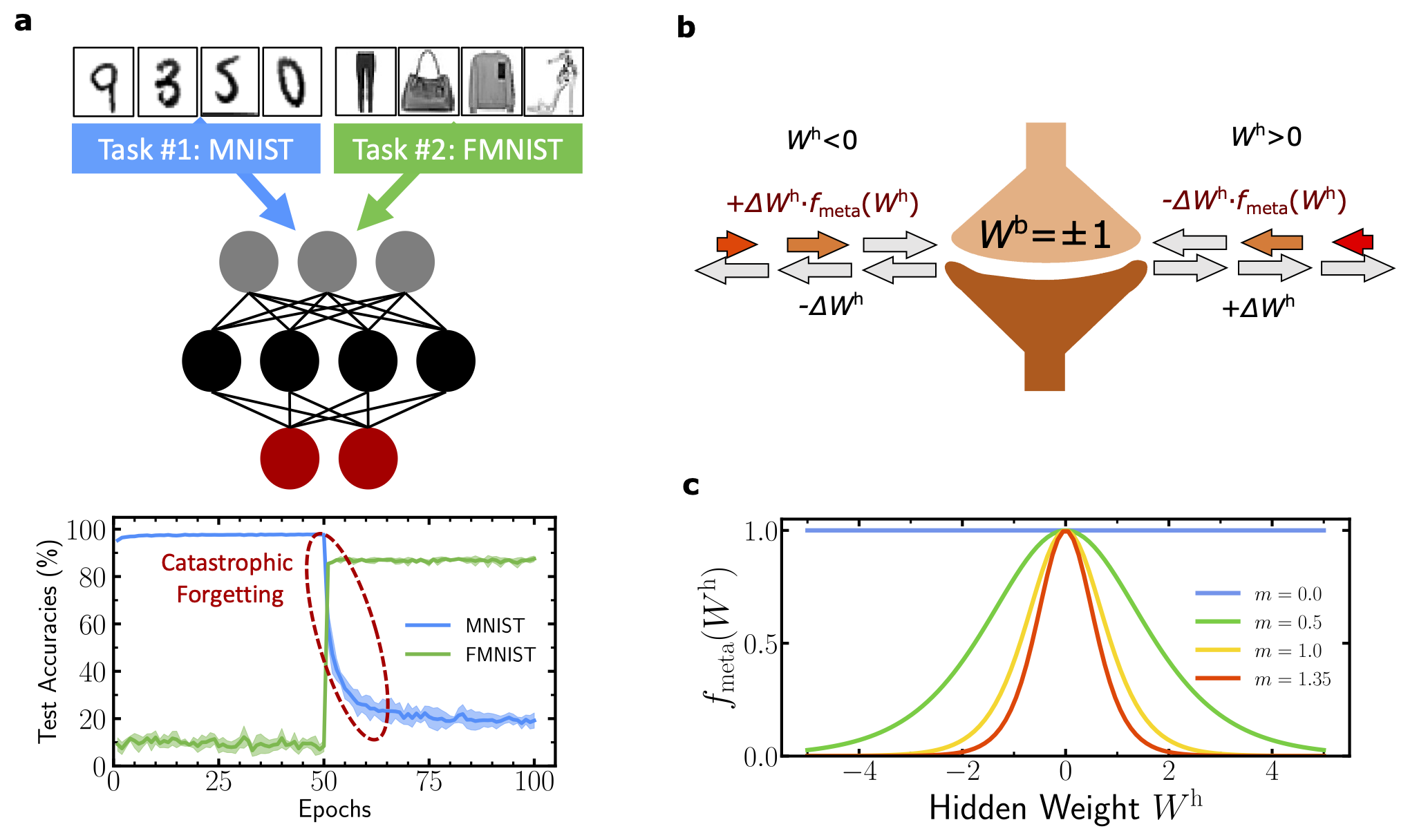

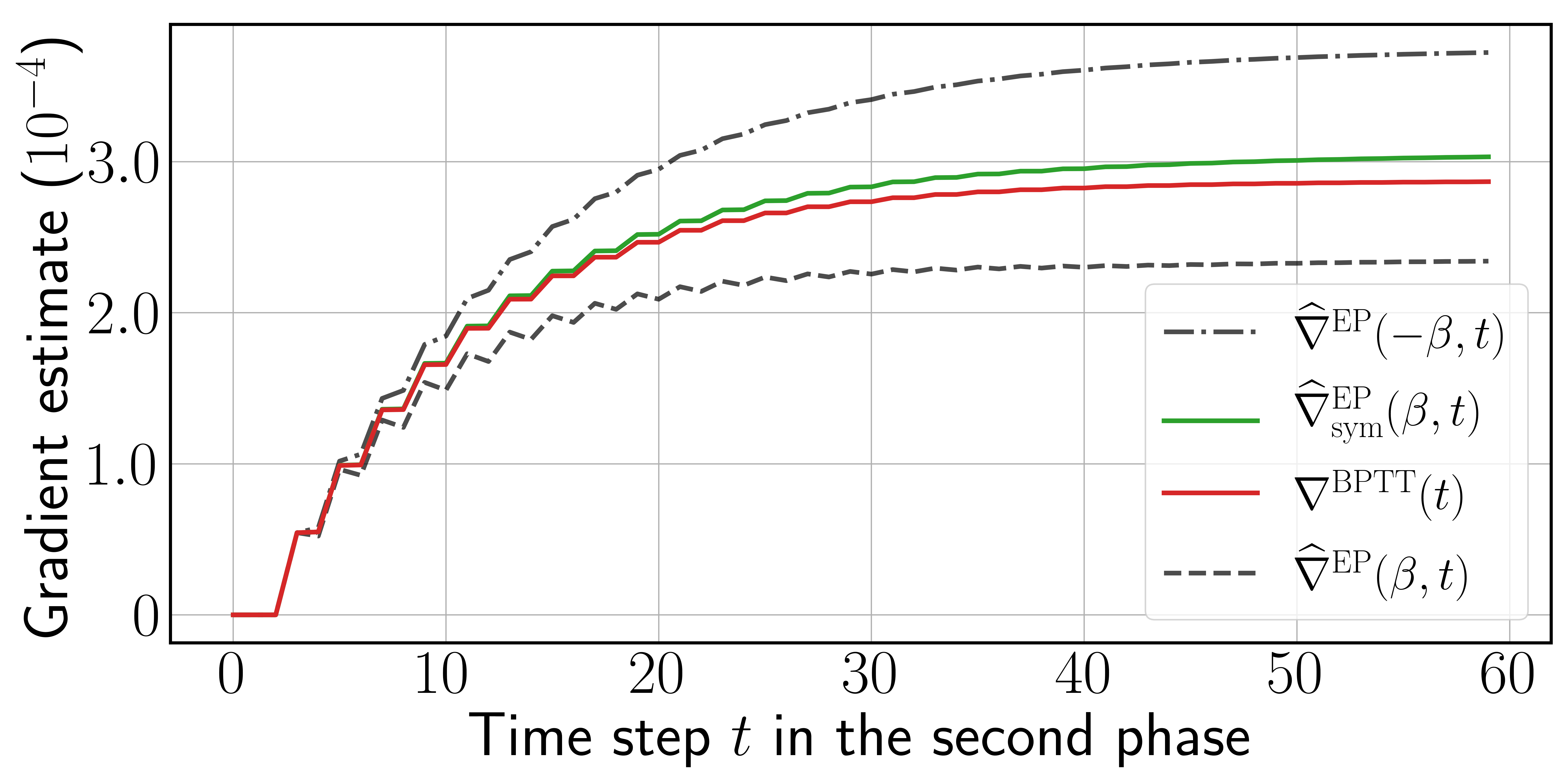

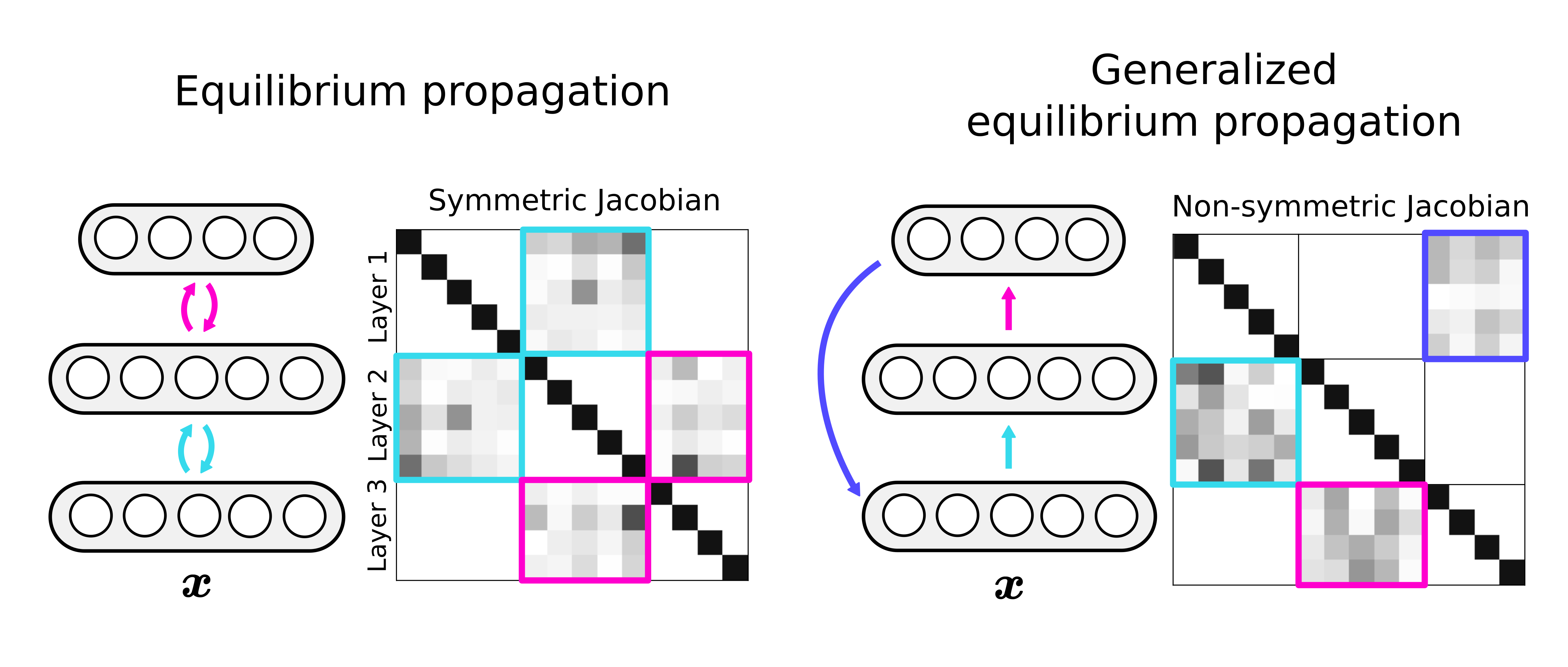

Equilibrium propagation (EP) is a local learning rule with strong theoretical links to gradient-based learning. However, it assumes an energy-based network, which forces synaptic connections to be symmetric. This is not only biologically implausible but also restrictive for architecture design. Here, we extend holomorphic EP (hEP) to arbitrary converging dynamical systems that need not have an energy function. We quantify how the absence of an energy function affects the accuracy of the gradient estimate. We also propose a simple regularization loss that pushes the network's Jacobian toward symmetry, a more general condition than symmetric synapses. With these improvements, generalized hEP scales to large vision datasets such as ImageNet 32.
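The abstract does not give the exact form of the regularizer. The following is a minimal sketch under stated assumptions. It assumes the penalty is the squared Frobenius norm of the antisymmetric part of the Jacobian at the equilibrium, $\lVert J - J^\top \rVert_F^2$, and that this norm is estimated with random probe vectors so the full Jacobian never has to be formed. The vector field `vector_field`, the helpers `relax`, `jacobian`, `asymmetry_exact` and `asymmetry_estimate`, and all sizes and step counts are hypothetical illustrations, not the authors' code.

```python
import numpy as np


def vector_field(s, W, b):
    """Hypothetical dynamics ds/dt = f(s) = -s + W tanh(s) + b.

    W is NOT assumed symmetric, so f need not be the gradient of an energy.
    """
    return -s + W @ np.tanh(s) + b


def relax(W, b, n_steps=500, dt=0.1):
    """Forward-Euler integration from s = 0 toward the fixed point f(s*) = 0."""
    s = np.zeros_like(b)
    for _ in range(n_steps):
        s = s + dt * vector_field(s, W, b)
    return s


def jacobian(s, W):
    """Analytic Jacobian df/ds = -I + W diag(1 - tanh(s)^2)."""
    gain = 1.0 - np.tanh(s) ** 2
    return -np.eye(len(s)) + W * gain[None, :]  # scales column j by gain[j]


def asymmetry_exact(J):
    """Squared Frobenius norm ||J - J^T||_F^2; zero iff J is symmetric."""
    A = J - J.T
    return float(np.sum(A * A))


def asymmetry_estimate(J, n_probes, rng):
    """Monte-Carlo estimate of ||J - J^T||_F^2 from random probes.

    For eps ~ N(0, I), E[||(J - J^T) eps||^2] = trace(A^T A) with A = J - J^T,
    so only the products J @ eps and J.T @ eps are needed. In an autodiff
    framework these would be a JVP and a VJP, avoiding the full Jacobian.
    """
    eps = rng.standard_normal((J.shape[0], n_probes))  # one probe per column
    diff = J @ eps - J.T @ eps
    return float(np.mean(np.sum(diff * diff, axis=0)))


rng = np.random.default_rng(0)
n = 5
W = rng.standard_normal((n, n))
W = 0.5 * W / np.linalg.norm(W, 2)  # spectral norm 0.5 keeps the dynamics contracting
b = rng.standard_normal(n)

s_star = relax(W, b)
J = jacobian(s_star, W)
print("residual |f(s*)|:   ", np.linalg.norm(vector_field(s_star, W, b)))
print("exact asymmetry:    ", asymmetry_exact(J))
print("estimated asymmetry:", asymmetry_estimate(J, 20000, rng))
```

In a training loop, such a penalty, evaluated at the equilibrium, would presumably be added to the task loss with some weighting coefficient (not specified here). This toy field is not the energy-based form used by standard EP, so even a symmetric `W` would generally not make `J` symmetric. The penalty acts on `J` directly, regardless of how `f` is parameterized.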